One of the greatest technological challenges associated with implementing machine-learning algorithms for bona fide commercial applications is tracking systematic (data-collection sourced) and computational (algorithmic) errors in real time. This is especially relevant in the areas of obstacle avoidance, where transient faults can compromise lives, and leave a company vulnerable to anti-money laundering and fraud. Leading software companies, such as Capgemini and McKinsey (open), which develop AML AI based solutions, claim to have unfixable error margins of 5% to 10%.

The technological objective of this project was to develop an approach to fix from 1% to 10% of unfixable error margins of computational (algorithmic) errors in real time where all other standard attempts have failed. The intent in implementing this approach would be to fix those “unfixable errors” in systems such as driverless systems, cyber security, and banking systems that require AI like AML. This goal was well beyond any technology available to the company, and knowledge on how to do it did not exist or wasn’t available through the public domain prior to the beginning of this project.

What are Scientific and Technological challenges Refyn engineers had?

The main technological obstacle developers faced was how to most effectively use explainability to purge unfixable errors from the output datasets. The team hypothesized that the previously AI-based search API developed by the Refyn (https://refyn.org/) might achieve the noise reduction necessary to cure their datasets of discrepant information.

Initially, a battery of systematic tests was conceived and designed in order to ensure the robustness of the algorithm both locally and over the entire domain. The first set of tests involved the implementation of a standard set of linear and mixed-effect regression techniques in order to estimate the root-mean-squared (RMS) discrepancies between forecast and actually observed values. However, conclusive results of these standard tests proved to be elusive as quantitative outliers overstated error-handling calls to the Refyn API resulting in performance degradation. Developers concluded that they needed to generate new knowledge about how CAI interacts with searchable source inputs before further such testing could continue. Since this information couldn’t be found in online or company resources, experimentation would be needed to generate the needed knowledge.

Our engineers, therefore, decided to implement an approach that parsed classifiers for a blend of parameters that included accuracy, specificity, precision, and recall. They assumed that the Refyn API would achieve an improvement with respect to one or more of the parameters, thereby achieving a reduction in the recorded number of false positives and/or false negatives. Binary and multiclass classification did, indeed, render a superior performance over the first set of test results; however, prescribing the correlation function criteria for calling the Refyn API proved to be an obstacle to generalizing the implementation. The assumption that a 0.8 cutoff would bypass extraneous error handling proved to be incorrect in all but the most trivial cases. Determining an appropriate cutoff requires a prohibitive amount of computational resources, which is beyond the storage and performance limitations of Search3w’s infrastructure.

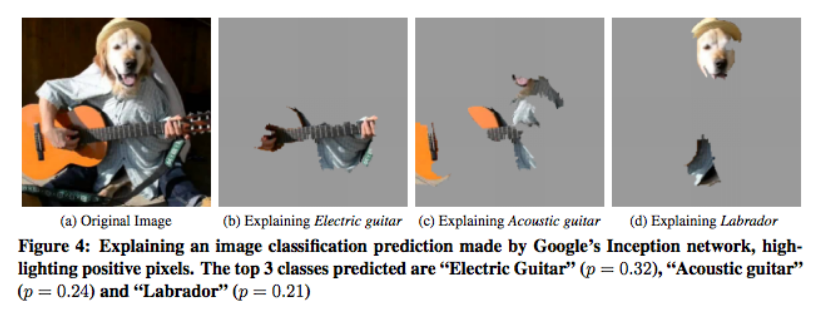

Developers decided that a more localized description of the domain sensitivity to perturbation was required. By interpolating features rather than obtaining bulk dataset descriptions at every data point in the domains, the team suspected that Refyn could be implemented more selectively to discriminate signals in the AI database. They used a model called LIME (Local Interpretable Model-Agnostic Explanations), a technique that provides an explanation of predictions of any machine learning classifier, so the predictions can be evaluated for their usefulness and accuracy, which is then used to diagnose the sensitivity of domain features to inconsequential changes. This further allows the error handler to strip outliers from the computation of the RMS error or weight them accordingly. This significantly improved the robustness of the error handler.

However, the AI systems still suffered from a high rate of errors after integrating LIME and the reason for this was not immediately obvious. Experimentation and analysis revealed that the LIME model is based upon the decomposition of the domain into interpretable components, thereby suppressing or diminishing perturbations of the original instance. And when the model interacts with Refyn, another black box model, the resulting noise is damped sequentially. After each iteration, LIME’s failure (e.g. too much noise in the original instance) was offset by the error handling capacities of Refyn. This was done in a targeted manner rather than exhaustively.

Status of the project

Refyn team had developed a way to integrate LIME and Refyn, and generated new knowledge about how to integrate LIME’s model with noise dampening technology to more accurately handle errors, information about which was not available from the public domain.

Our engineers achieved a technological advancement through developing a prototype of an engine for fixing 1% to 10% of unfixable error margins of computational (algorithmic) errors in real time when all other known standard attempts have failed. This was only possible after the team generated new knowledge about overcoming limitations of LIME (Local Interpretable Model-Agnostic Explanations) model, which is based upon the decomposition of the domain into interpretable components, thereby suppressing or diminishing perturbations of the original instance, and integrating LIME model and Refyn AI-based search API. The new knowledge acquired enabled implementation of an integrated LIME model that more accurately handled errors.